Conceptual illustration of physical input methods in Mixed Reality, from symbolic gestures to controllers to direct hand interactions.

CONVERSATIONAL UX AND EMBODIED DIGITAL AGENTS

Antique robot photograph, ‘Plutchik’s Psychoevolutionary theory of emotion’ reference, and conversation flow schematic for an embodied agent.

The goal is to make human-machine interactions natural and desirable. The work ranges from voice agents with narrow domain expertise to embodied, expressive personalities, incorporating sentiment analysis, AI, machine learning, scene understanding, and sensor fusion approaches.

My Roles:

Creative Direction.

Agent persona and backstory development.

Multi-modal, sensor fusion input.

Authoring of speech prosody, NLU, and dialog trees.

Internal UX of ‘Custom Commands’ speech service.

GESTURE-BASED INTERACTIONS

Partnering with a technical designer, I designed “bring to me” and “go back” hand gestures, paired with varying the semantic level of detail in the holographic UI associated with IoT devices (e.g., an audio player).

The system considers eye gaze, hand tracking, and voice simultaneously to infer the object of intent, an approach referred to as “multi-modal fusion.”

These familiar conversational gestures and their resulting actions are delightful and easy to discover and learn; an animated coach hand (hint) is incorporated into the UI surface.
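To make the idea of multi-modal fusion concrete, here is a minimal Python sketch, not the shipped system: each candidate object is scored by how closely the eye-gaze ray and the hand ray point at it and by whether the spoken words match it, and the highest weighted score wins. The `Target` type, the keyword sets, the Gaussian angular falloff, and the 0.5/0.3/0.2 weights are all hypothetical illustration choices.

```python
import math
from dataclasses import dataclass

@dataclass
class Target:
    name: str
    position: tuple[float, float, float]  # hypothetical world-space position (metres)
    keywords: set[str]                    # hypothetical words a user might say for this object

def angle_to(origin, direction, point):
    """Angle (radians) between a ray and the vector from the ray origin to a point."""
    v = [p - o for p, o in zip(point, origin)]
    dot = sum(a * b for a, b in zip(v, direction))
    norm = max(math.hypot(*v) * math.hypot(*direction), 1e-9)  # avoid division by zero
    return math.acos(max(-1.0, min(1.0, dot / norm)))

def ray_score(origin, direction, point, falloff=math.radians(10)):
    # 1.0 when the ray points straight at the object, decaying with angular error
    return math.exp(-(angle_to(origin, direction, point) / falloff) ** 2)

def infer_target(targets, head, gaze_dir, hand, hand_dir, utterance,
                 weights=(0.5, 0.3, 0.2)):
    """Return the target with the highest weighted gaze + hand + voice evidence."""
    words = set(utterance.lower().split())
    w_gaze, w_hand, w_voice = weights

    def score(t):
        voice = 1.0 if t.keywords & words else 0.0
        return (w_gaze * ray_score(head, gaze_dir, t.position)
                + w_hand * ray_score(hand, hand_dir, t.position)
                + w_voice * voice)

    return max(targets, key=score)
```

No single channel has to be decisive: gaze at the speaker plus “play music” outweighs a hand that happens to point at the lamp, while an ambiguous gaze lets the hand and the word “light” select the lamp.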

DIRECT HAND INTERACTIONS: FUNDAMENTALS

Product image ©Microsoft

Directly touching reactive holograms, enabled by articulated hand tracking, represents an important step toward instinctual interactions. While HoloLens 2 hand-tracking engineering was underway, I independently set out to identify the challenges and their solutions.

Approach

Through close study of near interactions in the physical world and with holograms, I found an important gap that created perceptual disconnections: occlusion and shadows. My self-directed prototypes and simulations directly influenced the hand interaction approach that shipped in HoloLens 2.

Occlusion and depth cues

With additive light displays, holograms visually occlude physical objects such as hands, making a hand appear to be behind a hologram even when it is actually in front of it. Rendering a digital hand that matches the user's hand would be one solution, but latency and small mismatches between the real and 3D hand introduce other disconnections.

My solution was to attach a small holographic object to the tracking points at the fingertips. These holographic dots do not suffer from the occlusion problem, and any drift in their position is small enough to go unnoticed, since a dot isn't attempting to match the hand's topology.

Proximity cues

Since dark colors, such as shadows, render as transparent on additive light displays, bright colors can provide proximity cues instead. Scaling these visuals with proximity lets us know when our fingers are close to touching a hologram: the “shadow” grows smaller and sharper the closer the finger is to the object.
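The proximity cue can be sketched as a simple mapping from fingertip-to-surface distance to the size, edge softness, and brightness of a bright “shadow.” This is a minimal Python illustration under assumed values: the 10 cm activation range, the radii, the blur width, and the brightening behavior are hypothetical, not the parameters that shipped in HoloLens 2.

```python
from dataclasses import dataclass

@dataclass
class ProximityCue:
    radius_m: float   # size of the bright "shadow" drawn on the hologram surface
    blur_m: float     # edge softness; 0 means a perfectly sharp edge
    intensity: float  # brightness 0..1 (dark pixels are transparent on additive displays)

def proximity_cue(distance_m, max_distance_m=0.10,
                  near_radius_m=0.004, far_radius_m=0.02, far_blur_m=0.01):
    """Map fingertip-to-surface distance to cue visuals (hypothetical thresholds)."""
    # t = 0 when touching, 1 at or beyond max_distance_m
    t = min(max(distance_m / max_distance_m, 0.0), 1.0)
    return ProximityCue(
        radius_m=near_radius_m + t * (far_radius_m - near_radius_m),  # shrinks as the finger approaches
        blur_m=t * far_blur_m,                                         # sharpens as the finger approaches
        intensity=1.0 - t,                                             # brightens as the finger approaches
    )
```

Because the cue is built only from bright light, it remains visible on an additive display, unlike a true dark shadow.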

DEXTEROUS HANDS IN VR/AR

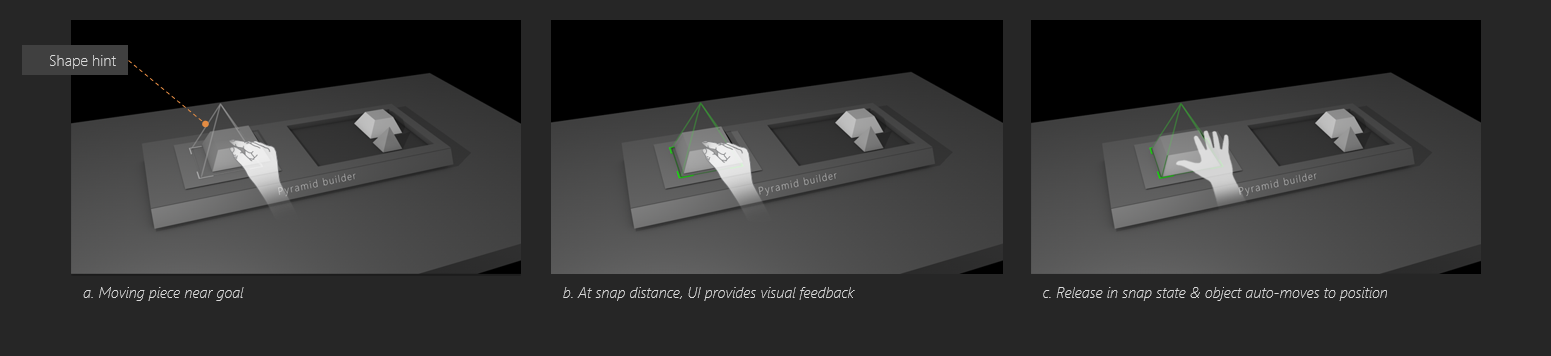

Because hand-tracking systems have accuracy limitations, I sought to understand how much system-provided ‘snapping’ is needed for grasping and placing virtual objects with high precision while maintaining a strong sense of agency.

Approach

I designed, illustrated and created 3D assets for three games spanning a range of system-provided assistance. A Leap Motion sensor attached to a VR headset was used for the tests. Two variables were tuned through the iterations: 1. proximity ‘snapping’ levels, and 2. visual/audio feedback.

The illustrations and 3D assets above were created in Cinema 4D; a prototyping expert then built the games in Unity3D.

VR Prototype

A working prototype recorded from a VR headset. In this clip, object snapping is set to a high level, making alignment and placement easy, but reducing the sense of agency.
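The tradeoff between snapping and agency can be illustrated with a small Python sketch. It is an assumption-laden illustration, not the prototype's Unity code: a held object is pulled toward a target slot only inside a hypothetical snap radius, and a hypothetical `snap_strength` (0 to 1) stands in for the tuned assistance level. At 0 the object sits exactly where the tracked hand puts it; higher values pull it harder toward the slot.

```python
import math

Vec3 = tuple[float, float, float]

def assisted_position(hand_pos: Vec3, slot_pos: Vec3,
                      snap_radius_m: float = 0.05, snap_strength: float = 0.5) -> Vec3:
    """Blend a held object's position toward a target slot (hypothetical parameters)."""
    d = math.dist(hand_pos, slot_pos)
    if d >= snap_radius_m:
        return hand_pos  # outside the snap zone: no assistance, full agency
    # The pull fades to zero at the edge of the snap zone and is
    # scaled by the chosen assistance level
    pull = snap_strength * (1.0 - d / snap_radius_m)
    return tuple(h + pull * (s - h) for h, s in zip(hand_pos, slot_pos))
```

Ramping the pull with distance, rather than jumping to the slot, keeps the object following the hand near the edge of the zone, which is one way to trade placement ease against the sense of agency.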

HANDS, TOOLS, AND EXPRESSION

Hands

The human hand is a marvel, integrating dexterity and tactile sensitivity. As described in an introduction to Vernon Mountcastle’s “The Sensory Hand”:

“With our hands, we feel, point, and reach; we determine the texture and shape of objects, we communicate and receive signs of approval, compassion, condolence, and encouragement, and, on a different register, rejection, threat, dislike, antagonism, and attack.”

As miraculous as the hand is, adding a well-designed tool elevates human capabilities immeasurably.

Hands and embodied communication

Making and organizing things isn’t all we do with hands and tools. We communicate with each other (and with machines): we point, grasp, throw, catch, and use symbolic and expressive gestures. Even our subtlest gestures are an extension of our expression, working alongside facial expression, voice tone and pitch (prosody), and body language. And don’t forget context.

Designing a system that understands our hands, our tools, and our personal expression, while letting us switch between and combine them seamlessly and at human speed, is a paramount challenge.

Tools and hands

In our everyday lives, we switch between our hands and hand tools countless times. Think of cleaning your kitchen after cooking a meal: moving countertop items with one hand, then seamlessly grasping a sponge with the other to wipe the counter, then pushing the crumbs into the free hand, now cupped at the edge of the counter. Similar things happen when drawing, cooking, making a home repair, or gardening. The examples are numerous.

Tools and hands in virtual worlds (AR/VR)

In virtual and augmented reality, we come across many types and shapes of controllers. Some are good at one thing but not another. That’s okay, as no physical-world tool does everything well, either. For designers, the trick is giving people the means to switch effortlessly (no thinking required) from hands to hand tools, or better still, to switch just a single hand to a physical tool and back. Ultimately, hand recognition will be so good and intuitive that we won’t use physical controllers any longer; designers need to design for complex intents and actions.

Thanks for visiting.